
In 2023, building an AI application was an exercise in “prompt engineering.” You wrote a poem to a Large Language Model (LLM), crossed your fingers, and hoped the output didn’t hallucinate. It was alchemy: impressive, but unrepeatable.

By 2026, the alchemy has hardened into chemistry. The era of the “AI Wrapper”—a thin UI pasted over an OpenAI API call—is dead. It has been replaced by the Agentic Stack: a robust, standardized, and increasingly complex set of infrastructure designed not just to generate text, but to execute work.

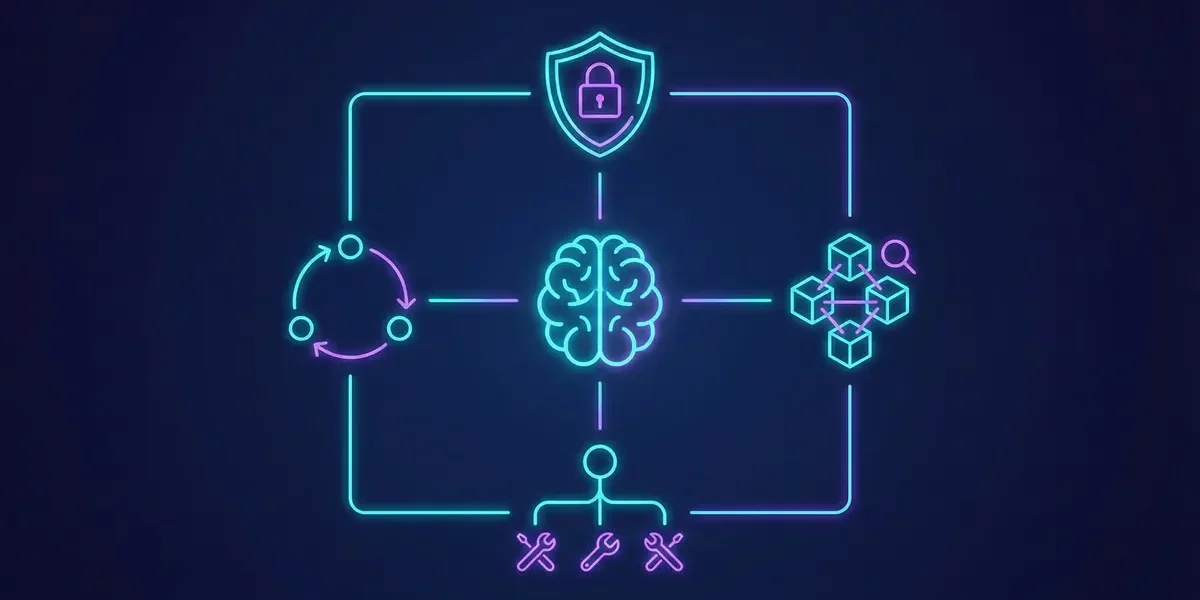

For CIOs and engineering leaders, the question is no longer “Which model should we use?” (a commodity decision) but “What does our runtime look like?” The architecture of autonomy has arrived, and it looks nothing like the software stacks of the last decade.

Here is the anatomy of the new runtime.

For years, the biggest bottleneck in AI development was the “N×M” problem. If you wanted your AI to talk to Google Drive, Salesforce, and Slack, you had to write custom API wrappers for each one. If you switched models—say, from GPT-4 to Claude 3.5—you often had to rewrite that glue code.

Enter the Model Context Protocol (MCP).

Introduced by Anthropic as an open standard and rapidly adopted, MCP is effectively the “USB-C” of the AI world. It decouples the model from the tools. Instead of hard-coding a “Salesforce Integration” into your bot, you point your agent at an MCP server that exposes your Salesforce data and actions.

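MCP standardizes three things: resources (data the model can read), prompts (reusable prompt templates), and tools (functions the model can call).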

This has profound implications. It means your data sources are no longer “plug-ins” but portable servers. You can swap the brain (the LLM) without changing the hands (the tools). In 2026, a “Data Warehouse” isn’t just for storage; it’s an active participant that exposes its own MCP server, ready to be queried by any authorized agent in the company.
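To make the “swap the brain, keep the hands” idea concrete, here is a minimal sketch in plain Python. It is not the MCP wire protocol or any MCP SDK; `ToolServer`, `Agent`, and the `lookup_account` tool are hypothetical names invented for illustration. The only point is that the agent depends on a shared interface rather than on a vendor API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ToolServer:
    """Hypothetical stand-in for an MCP-style server: it advertises tools by name."""
    name: str
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)

    def list_tools(self) -> List[str]:
        return sorted(self.tools)

    def call_tool(self, tool: str, **kwargs) -> str:
        return self.tools[tool](**kwargs)


@dataclass
class Agent:
    """The 'brain'. It only knows the server interface, not any vendor API."""
    model_name: str

    def run(self, server: ToolServer, tool: str, **kwargs) -> str:
        if tool not in server.list_tools():
            raise KeyError(f"{server.name} does not expose {tool!r}")
        result = server.call_tool(tool, **kwargs)
        return f"[{self.model_name}] {result}"


# One server per data source, written once.
crm = ToolServer("crm", {"lookup_account": lambda account_id: f"account {account_id}: active"})

# Swapping the model requires no change to the server code.
first = Agent("model-a").run(crm, "lookup_account", account_id="42")
second = Agent("model-b").run(crm, "lookup_account", account_id="42")
print(first)
print(second)
```

With N models and M data sources, this shape needs N + M pieces of code rather than N×M custom wrappers, which is the bottleneck the section describes.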

In the early days (2024), we built “Chains.” A chain is a linear sequence: Step A -> Step B -> Step C. This works for simple tasks, but it fails catastrophically in the real world. Real work is messy. It involves loops, retries, and conditional logic.

This reality gave rise to Graph-Based Orchestration engines, with LangGraph (emerging from the LangChain ecosystem) and CrewAI leading the market.

Unlike a chain, a graph allows for cycles. Consider a “Code Writer” agent. In a linear chain, it writes code and stops. In a graph architecture, it writes the code, runs the tests, and, if they fail, routes the errors back to the writing step.

This loop continues until the condition (Success) is met. This is a practical definition of “Agency”: the ability to perceive errors and self-correct without human intervention.
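A write-test-retry cycle of this kind can be sketched in a few lines of plain Python. This is not the LangGraph or CrewAI API; the node names, the `route` function, and the `max_attempts` cap are assumptions made for illustration, and the “LLM” is a stub that returns a buggy draft first and a fixed one second.

```python
from typing import Callable, Dict

State = Dict[str, object]


def write_code(state: State) -> State:
    # Stand-in for an LLM call: the first draft is buggy, the revision is fixed.
    attempt = int(state["attempts"]) + 1
    draft = "def add(a, b): return a - b" if attempt == 1 else "def add(a, b): return a + b"
    return {**state, "attempts": attempt, "code": draft}


def run_tests(state: State) -> State:
    namespace: dict = {}
    exec(str(state["code"]), namespace)  # toy only; never exec untrusted code
    passed = namespace["add"](2, 3) == 5
    return {**state, "passed": passed}


def route(state: State) -> str:
    # Conditional edge: loop back to the writer on failure, stop on success.
    if state["passed"]:
        return "END"
    if int(state["attempts"]) >= int(state["max_attempts"]):
        return "END"  # give up rather than loop forever
    return "write_code"


NODES: Dict[str, Callable[[State], State]] = {"write_code": write_code, "run_tests": run_tests}
EDGES: Dict[str, Callable[[State], str]] = {"write_code": lambda s: "run_tests", "run_tests": route}


def run_graph(state: State, start: str = "write_code") -> State:
    node = start
    while node != "END":
        state = NODES[node](state)
        node = EDGES[node](state)
    return state


final = run_graph({"attempts": 0, "max_attempts": 5, "passed": False})
print(final["attempts"], final["passed"])
```

The edge from `run_tests` back to `write_code` is what a linear chain cannot express. The attempt cap is a design choice worth keeping even in a sketch, since an uncapped cycle has no guaranteed exit.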

We are also seeing distinct “organizational charts” for software:

Retrieval-Augmented Generation (RAG)—the practice of letting AI read your documents—has undergone a massive upgrade.

Standard RAG was a glorified similarity search. You asked a question, it found similar paragraphs in a database and summarized them. It failed if the answer required synthesizing data from three different documents.

Agentic RAG introduces a “Planner” before the search. When you ask, “How did our Q3 marketing spend compare to Q2 revenue growth?” an Agentic RAG system doesn’t just search. It plans: retrieve the Q3 marketing spend, retrieve the Q2 revenue growth, then compare the two.
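A minimal sketch of that plan-then-retrieve flow follows. Everything in it is hypothetical: the document names, the `Step` type, and the figures are placeholders, and `plan` is a hard-coded stub where a real system would ask an LLM to decompose the question.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical document store; the figures are placeholders, not real data.
DOCS: Dict[str, Dict[str, float]] = {
    "marketing_report_q3": {"marketing_spend": 120.0},
    "finance_summary_q2": {"revenue_growth_pct": 8.0},
}


@dataclass
class Step:
    doc: str     # which document to retrieve
    metric: str  # which figure to extract from it


def plan(question: str) -> List[Step]:
    # Stand-in for the LLM planner: a real one would derive steps from the question.
    return [
        Step("marketing_report_q3", "marketing_spend"),
        Step("finance_summary_q2", "revenue_growth_pct"),
    ]


def execute(steps: List[Step]) -> Dict[str, float]:
    # One targeted retrieval per step instead of a single similarity search.
    return {step.metric: DOCS[step.doc][step.metric] for step in steps}


def synthesize(facts: Dict[str, float]) -> str:
    return (
        f"Q3 marketing spend: {facts['marketing_spend']}; "
        f"Q2 revenue growth: {facts['revenue_growth_pct']}%"
    )


steps = plan("How did our Q3 marketing spend compare to Q2 revenue growth?")
facts = execute(steps)
answer = synthesize(facts)
print(answer)
```

The separation into `plan`, `execute`, and `synthesize` is the part that matters: each sub-question gets its own retrieval, so an answer spread across several documents is no longer out of reach.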

Agents also need to remember you. Vector databases (like Pinecone or Weaviate) handle semantic knowledge, but Episodic Memory is the new frontier. Tools like Mem0 or Zep provide a “knowledge graph” of the user’s history. If you tell your agent, “I hate Python, use Rust,” it doesn’t just store that text. It updates a stateful profile: User_Preference: {Language: Rust, Avoid: Python}. Six months later, it will still refuse to write Python, because that memory is pinned to its state.
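The difference between storing the sentence and updating a profile can be sketched as follows. This is not the Mem0 or Zep data model; `UserProfile` and its methods are hypothetical, and the extraction step that would turn the user's sentence into structured values is assumed to have already happened.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class UserProfile:
    """Hypothetical stateful profile; not the Mem0 or Zep data model."""
    preferences: Dict[str, str] = field(default_factory=dict)
    avoid: Set[str] = field(default_factory=set)

    def remember_language_choice(self, preferred: str, avoided: str) -> None:
        # Store a structured fact rather than the raw sentence.
        self.preferences["Language"] = preferred
        self.avoid.add(avoided)

    def allows(self, language: str) -> bool:
        return language not in self.avoid


profile = UserProfile()
# In a real system an LLM would extract these values from "I hate Python, use Rust".
profile.remember_language_choice(preferred="Rust", avoided="Python")

print(profile.preferences)
print(profile.allows("Python"))
```

Because the preference lives in state rather than in a retrieved text snippet, the agent can check it deterministically before every code-generation step.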

The most terrifying moment for an AI engineer in 2026 is not a sentient robot; it is the API Bill.

Autonomous loops are expensive. An agent stuck in a “retry loop”—trying to fix a bug, failing, and trying again 5,000 times in a minute—can burn through a monthly budget in an hour.

This has created a new discipline: Agentic FinOps. Observability platforms like Helicone, LangSmith, and Arize now track “Cost Per Goal” rather than just “Cost Per Token.”
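One plausible way to compute “Cost Per Goal” is total spend, including failed attempts, divided by the number of goals actually completed. The sketch below assumes that definition; the `Run` record, the flat per-token price, and the numbers are hypothetical and do not reflect how Helicone, LangSmith, or Arize calculate it.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Run:
    goal_id: str
    tokens: int
    succeeded: bool


def cost_per_token(price_per_1k_tokens: float) -> float:
    return price_per_1k_tokens / 1000


def cost_per_goal(runs: List[Run], price_per_1k_tokens: float) -> float:
    # Total spend, including failed attempts, divided by goals actually completed.
    total_cost = sum(r.tokens for r in runs) * cost_per_token(price_per_1k_tokens)
    completed = {r.goal_id for r in runs if r.succeeded}
    if not completed:
        raise ValueError("no goal completed; cost per goal is undefined")
    return total_cost / len(completed)


# Hypothetical numbers: three attempts at one goal, only the last succeeds.
runs = [
    Run("fix-bug", 4000, False),
    Run("fix-bug", 4000, False),
    Run("fix-bug", 2000, True),
]
result = cost_per_goal(runs, price_per_1k_tokens=0.01)
print(round(result, 4))
```

The token price is identical across all three runs, yet the goal cost five times what the successful attempt alone did. That gap is what a per-token metric hides.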

We are seeing the implementation of Circuit Breakers at the infrastructure level:
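A minimal sketch of one such breaker, assuming two limits: a cap on loop iterations and a cap on spend. The class name, the limits, and the per-attempt cost are hypothetical; a production version would read real usage from the provider or an observability platform.

```python
class BudgetExceeded(RuntimeError):
    pass


class CircuitBreaker:
    """Trips when an agent loop exceeds a step limit or a spend limit."""

    def __init__(self, max_steps: int, max_cost: float) -> None:
        self.max_steps = max_steps
        self.max_cost = max_cost
        self.steps = 0
        self.cost = 0.0

    def record(self, step_cost: float) -> None:
        self.steps += 1
        self.cost += step_cost
        if self.steps > self.max_steps:
            raise BudgetExceeded(f"step limit {self.max_steps} exceeded")
        if self.cost > self.max_cost:
            raise BudgetExceeded(f"cost limit {self.max_cost} exceeded")


def failing_agent_step() -> bool:
    # Stand-in for an agent attempt that never succeeds.
    return False


breaker = CircuitBreaker(max_steps=10, max_cost=1.00)
tripped = False
try:
    while not failing_agent_step():
        breaker.record(step_cost=0.25)  # hypothetical cost per attempt
except BudgetExceeded as exc:
    tripped = True
    print(f"halted after {breaker.steps} steps: {exc}")
```

Here the spend limit trips on the fifth attempt, well before the step limit. Keeping the breaker outside the agent's own logic means a confused agent cannot talk itself past it.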

The technology stack described above is not just IT plumbing; it is the new operating system of the modern enterprise.

The companies that win in the latter half of this decade will not be the ones with the best prompts. They will be the ones with the most robust Runtime. They will be the organizations that have successfully decoupled their data (MCP), architected resilient graphs (LangGraph), and built the governance rails to let these systems run safely at night.

We are done playing with chatbots. It is time to build the machine.
